When you’re analyzing survey data, you may occasionally end up with results that seem too good, too bad, or too incredible to be true. And sometimes they are. One way to check whether a finding could simply be a fluke is to test it for statistical significance.

Statistical Significance Explained

Statistical significance helps you judge whether the results of your analysis could easily have arisen by chance, or whether they are more likely to reflect a real pattern in the population.

When you conduct a survey or other research, the analysis is based on a sample of a population, not the entire population. The hope is that the sample reflects the attitudes of the entire group, but that is not always the case.

Analyzing statistical significance is a useful way to gauge how much you can trust your research. An example will help explain the concept.

Example of Statistical Significance

Let’s say you have recently launched a new product designed for younger customers and want to understand whether it sells better to consumers aged 18-30 than to those aged 31+. After analyzing a subset of total sales by age group, you find that consumers aged 18-30 spend, on average, $5 per month more on the product than consumers aged 31+. Does this mean that your product is destined for success with consumers aged 18-30 across your entire customer base? Not necessarily. Further research could reveal that the product performs equally well with those aged 31+. The sample of sales you selected may not have given you an accurate representation of your customer base as a whole.

When the results of a sample do not accurately reflect the results you would get from the entire group, the discrepancy is known as sampling error.

There are two main contributors to sampling error:

- Sample size

- Variation in the overall population
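Both contributors can be seen in a small simulation. The sketch below is illustrative only: the two 100-customer populations are hypothetical, built to mirror the spending example in the Population Variation discussion (both average $10 per purchase, but in one only 20 percent of customers spend exactly $10). It draws many random samples and measures how far the sample average typically strays from the true $10 average.

```python
import random
import statistics


def typical_sampling_error(population, sample_size, trials=2000, seed=0):
    """Standard deviation of the sample average across many random samples.

    Larger values mean a single sample's average tends to land further
    from the true population average, i.e. more sampling error.
    """
    rng = random.Random(seed)
    sample_means = [
        statistics.fmean(rng.sample(population, sample_size)) for _ in range(trials)
    ]
    return statistics.pstdev(sample_means)


# Hypothetical 100-customer populations; both average exactly $10 per purchase.
# Low variation: 80% spend exactly $10, the rest spend $5 or $15.
low_variation = [10.0] * 80 + [5.0] * 10 + [15.0] * 10
# High variation: only 20% spend exactly $10, the rest spend $5 or $15.
high_variation = [10.0] * 20 + [5.0] * 40 + [15.0] * 40

for label, population in (("low variation", low_variation), ("high variation", high_variation)):
    for n in (10, 50):
        error = typical_sampling_error(population, n)
        print(f"{label:>14}, sample of {n:>2}: sample average typically off by about ${error:.2f}")
```

Running it should show the typical error shrinking as the sample grows from 10 to 50 customers, and growing when the population is more spread out, even though both populations share the same $10 average.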

Sample Size

The larger your sample, the more likely it is to reflect the entire group (assuming the sample is properly designed and randomly drawn). A random group of 100 customers is likely to reflect your entire base of 1,000 customers more accurately than a group of 10 customers would. In general, the larger your sample, the smaller your sampling error tends to be.

Population Variation

The more variation there is in your overall population, the higher your chances of sampling error. That’s because you are more likely to end up with a sample whose average differs from the average of the whole group.

If you had a customer base of 100 customers, for instance, and 80 percent of them spent exactly the overall average of $10 per purchase, you could choose a smaller sample and still be confident in your results.

But if only 20 percent of those 100 customers spent the overall average of $10 per purchase, a small sample would be more prone to sampling error. The overall average spend may still be $10 per purchase, but more people would spend substantially more or substantially less than the average.

Choosing a sample from the latter population increases the likelihood of sampling error because you may end up with an unbalanced sample of people who spent much more or much less than the average.

Evaluating Statistical Significance

You can check your results by testing them for statistical significance. This process involves several steps. The first is to establish a null hypothesis, the default “no effect” position you are looking for evidence against, along with an alternative hypothesis, the effect you are looking for evidence of. For example, in our new product launch case, the null and alternative hypotheses are as follows:

- Null hypothesis (H0): Consumers aged 18-30 spend, on average, the same amount on the new product as consumers aged 31+

- Alternative hypothesis (Ha): Consumers aged 18-30 spend, on average, a different amount on the new product than consumers aged 31+
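Written formally, with $\mu_{18\text{-}30}$ and $\mu_{31+}$ standing for the true average monthly spend of each age group across the whole customer base (notation introduced here for illustration, not part of the original example), the hypotheses are two-sided, and the p-value described next is a probability computed under the assumption that $H_0$ holds:

$$
H_0:\ \mu_{18\text{-}30} = \mu_{31+}
\qquad\text{vs.}\qquad
H_a:\ \mu_{18\text{-}30} \neq \mu_{31+}
$$

$$
p = \Pr\!\left( \left|\bar{X}_{18\text{-}30} - \bar{X}_{31+}\right| \ge d_{\text{obs}} \;\middle|\; H_0 \right)
$$

Here each $\bar{X}$ is the average spend in a sample, and $d_{\text{obs}}$ is the absolute gap actually observed in your data (5 dollars per month in the example).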

Once you have established the null and alternative hypotheses, you can calculate the probability value, or “p-value.” The p-value is the probability of seeing results at least as extreme as the ones you observed if the null hypothesis were true. It is not the probability that the null hypothesis is true or false.

- Small p-values indicate strong evidence against the null hypothesis, so it can be rejected and the observed results are considered statistically significant. The usual threshold is a p-value of 0.05 or less.

- Larger p-values mean the evidence is too weak to reject the null hypothesis, so the observed results are NOT statistically significant. This does not prove the null hypothesis is true; it only means the data are consistent with it. P-values greater than 0.05 typically fall into this category.
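To make the p-value concrete, here is a minimal Python sketch of one way to compute it for the product example: a permutation test. The spend figures are invented for illustration, and the permutation test is a swapped-in technique chosen because it needs only the standard library; survey analysis software may use a t-test or another method instead. The idea is that if the null hypothesis is true, the age-group labels don’t matter, so the code shuffles them many times and reports the share of shuffles whose gap in average spend is at least as large as the one actually observed.

```python
import random
import statistics


def permutation_p_value(group_a, group_b, n_permutations=10_000, seed=0):
    """Two-sided permutation test for a difference in average spend."""
    rng = random.Random(seed)
    observed = abs(statistics.fmean(group_a) - statistics.fmean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        # Shuffle the labels by shuffling the pooled data and re-splitting it.
        rng.shuffle(pooled)
        gap = abs(statistics.fmean(pooled[:n_a]) - statistics.fmean(pooled[n_a:]))
        # Small tolerance so floating-point rounding doesn't hide exact ties.
        if gap >= observed - 1e-9:
            at_least_as_extreme += 1
    # Add-one correction: the observed split counts as one of the arrangements,
    # so the estimated p-value is never exactly zero.
    return (at_least_as_extreme + 1) / (n_permutations + 1)


# Hypothetical monthly spend (in dollars) for two small samples of customers.
aged_18_30 = [25, 30, 28, 35, 32, 27, 31, 29, 33, 30]  # average $30
aged_31_plus = [24, 26, 22, 27, 25, 23, 28, 24, 26, 25]  # average $25

ALPHA = 0.05  # significance cut-off, chosen before looking at the data
p = permutation_p_value(aged_18_30, aged_31_plus)
if p <= ALPHA:
    print(f"p = {p:.4f}: reject H0; the $5 gap is statistically significant")
else:
    print(f"p = {p:.4f}: fail to reject H0; the gap is not statistically significant")
```

With these made-up numbers the $5 gap is large relative to the spread within each group, so very few shuffles match it and the p-value falls well below 0.05. A permutation test makes few assumptions about how spending is distributed; with larger samples, a two-sample t-test will usually lead to the same conclusion.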

While the typical p-value cut-off is 0.05 (corresponding to a 95% confidence level), you can adjust it to suit your specific survey or experiment, ideally before you look at the results. Other common cut-offs are 0.1 (90% confidence level) and 0.01 (99% confidence level).

Statistical Significance Dos and Don’ts

Because good survey analysis software calculates statistical significance for you, company managers and executives don’t need to dive into all the details. It is important, however, to have an overall understanding of statistical significance so it isn’t misused or misconstrued. A few dos and don’ts:

- Do be wary of using the word “significant” to describe data findings unless you actually mean statistical significance. Use other language to express importance or relevance.

- Do review the statistical significance of every experiment or survey going forward, if you haven’t been doing so already. Ask your analyst to report it along with the rest of the results.

- Don’t treat statistical significance as proof that your results are accurate. We discussed sampling errors above, but non-sampling errors are actually more common. Non-sampling errors occur when your data is flawed for reasons unrelated to which respondents were sampled, such as response errors, mistakes in recording or coding data, or coverage errors.

Where Statistical Significance Fits with Market Research

Market research provides the voice of the customer to organizations and the foundation for strategies meant to improve products and customer satisfaction, so it is critically important that the insights research professionals generate are valid. If research insights are not sound, the resulting strategies can distract the business or steer it off course.

Statistical significance is a particularly useful tool for researchers to check the validity of insights and to measure the strength of trends over time. Let’s revisit our new product launch for an example.

If preliminary sales data shows that consumers aged 18-30 spend $5 more per month on your new product, that could have important implications for marketing and product strategy. If the p-value for this difference is 0.02, there is strong evidence to reject your null hypothesis (i.e., that consumers aged 18-30 spend the same amount on the new product as consumers aged 31+) and to conclude that the gap reflects a real pattern, with younger consumers spending more. You can then plan and forecast accordingly with more confidence. However, if the test on the preliminary sales data produces a p-value of 0.07 (or any number greater than 0.05), you cannot reject the null hypothesis. That does not prove the two groups spend the same amount, but it does indicate that further analysis should be performed before you conclude that the new product will generate more sales among younger consumers.

Conclusion

Statistical significance testing is a powerful tool that helps researchers validate their insights and lends credibility to research. Although statistical significance is typically calculated with the click of a mouse in survey analysis software, managers and executives can interpret the results with more confidence if they understand the basics outlined in this post.

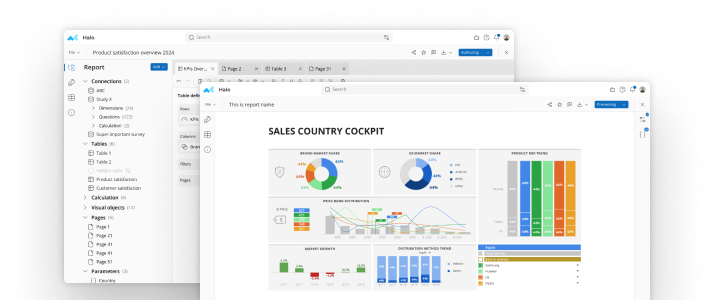
