Assessing a survey’s validity is essential to ensure you obtain meaningful data, and Cronbach’s alpha (CA) is a useful measurement to use during the survey validation process.

What is Cronbach's Alpha?

Cronbach's alpha is a statistical measure used to assess the reliability, or internal consistency, of a scale or set of survey questions. It measures the extent to which the items on the scale correlate with one another. Values normally fall between 0 and 1, with higher values indicating greater internal consistency; a negative value is possible and usually signals a problem, such as items that are negatively correlated with one another. Generally, a Cronbach's alpha value of 0.7 or higher is considered acceptable for research purposes. This measure is commonly used in fields such as psychology, education, and sociology to check that the measures used to assess constructs such as attitudes, beliefs, or personality traits are reliable.

Also written as Cronbach alpha or Cronbachs alpha, CA is ideal for measuring the internal consistency among survey questions that:

- You believe all measure the same factor

- Are correlated with one another

- Can be constructed into some type of scale

Role as Reliability Measure

As a reliability measure, CA lets you know whether a survey respondent would give you the same response with regard to a specific variable if you were to present that variable to the respondent again and again. While you could present the same variable to the same respondent by asking the person to fill out the same survey multiple times, that solution is not feasible. Not only does it risk annoying respondents, but it is also time consuming and costly. Measuring internal consistency using CA can solve the problem instead.

CA Values

Cronbach alpha values range from 0 to 1.0, with many experts saying the value should reach at least 0.6 to 0.7 to indicate acceptable consistency. If you find a lower value while assessing your CA, some programs let you reassess CA after removing a specific question. This allows you to pinpoint and eliminate questions that do not produce consistent responses, which increases the reliability of your survey.
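The "reassess after removing a question" check can be sketched in a few lines of Python. This is a minimal illustration, not the method of any particular survey program: it assumes NumPy is available, the function names `cronbach_alpha` and `alpha_if_item_deleted` are our own, and the response data is made up. It uses the standard formula, alpha = k / (k - 1) × (1 - sum of item variances / variance of total scores), where k is the number of items.

```python
import numpy as np

def cronbach_alpha(scores):
    """Cronbach's alpha for a (respondents x items) array of item scores."""
    scores = np.asarray(scores, dtype=float)
    k = scores.shape[1]                              # number of items
    item_variances = scores.var(axis=0, ddof=1)      # sample variance of each item
    total_variance = scores.sum(axis=1).var(ddof=1)  # variance of the summed scores
    return k / (k - 1) * (1 - item_variances.sum() / total_variance)

def alpha_if_item_deleted(scores):
    """Alpha recomputed with each item left out in turn."""
    scores = np.asarray(scores, dtype=float)
    return [cronbach_alpha(np.delete(scores, i, axis=1))
            for i in range(scores.shape[1])]

# Hypothetical data: 6 respondents x 4 items, each scored 1 to 4.
# The fourth item was deliberately made inconsistent with the others.
responses = np.array([
    [1, 1, 2, 4],
    [2, 2, 2, 1],
    [3, 3, 3, 3],
    [4, 4, 3, 1],
    [2, 3, 2, 4],
    [4, 4, 4, 2],
])

overall = cronbach_alpha(responses)
deleted = alpha_if_item_deleted(responses)
print(f"alpha with all items: {overall:.2f}")
for i, value in enumerate(deleted, start=1):
    print(f"alpha without item {i}: {value:.2f}")
```

With this made-up data, alpha for all four items is about 0.31, and dropping the fourth item raises it to roughly 0.94, which flags that item as the inconsistent question.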

How Cronbach's Alpha Works

Let’s say you’re collecting automotive industry survey data and you want to determine the level of anxiety motorists experience while driving. While you could ask respondents outright about their anxiety levels, self-reporting with a single question in this type of area may not always produce the most accurate results. You could instead ask a series of questions that all measure anxiety levels, and then combine the responses into a single numerical value that helps you more accurately assess overall anxiety.

To achieve this, you can first devise a table with 10 items that record the degree of anxiety respondents experience in certain driving situations. Score each item from 1 to 4, with 1 indicating no anxiety and 4 indicating anxiety high enough to make the respondent stop driving. You then sum the scores on all 10 items to determine the final score, which can range from 10 to 40.

For these questions to be accurate, they need to have internal consistency. Because the questions all measure the same factor, they need to be correlated with one another. Applying CA to the questions helps to ensure they are, with CA values typically increasing when the correlations between the questions increase.
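The link between inter-item correlation and alpha can be illustrated with the standardized form of alpha, which depends only on the number of items and their average correlation. The sketch below is an illustration under stated assumptions: it treats all items as having equal variance, the function name `standardized_alpha` is our own, and the correlation values are hypothetical rather than taken from a real survey.

```python
def standardized_alpha(n_items, mean_r):
    """Standardized alpha from the item count and the mean inter-item correlation."""
    return n_items * mean_r / (1 + (n_items - 1) * mean_r)

# A 10-item scale, as in the driving-anxiety example, at three hypothetical
# levels of average correlation between the items.
for mean_r in (0.1, 0.3, 0.5):
    alpha = standardized_alpha(10, mean_r)
    print(f"mean correlation {mean_r:.1f} -> alpha {alpha:.2f}")
```

For 10 items this prints alpha values of 0.53, 0.81, and 0.91, so the scale only clears the common 0.7 threshold once the questions are reasonably well correlated.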

CA is generally used to determine internal consistency among questions that are based on a scale, such as the example above, or questions with two possible answers.

CA Caveats

CA can be a valuable measurement, but it does come with a few issues of which you should be aware.

- The alpha measurement in CA can only accurately estimate reliability if the questions are τ-equivalent. That means the questions can have different variances and means, but their covariances need to be equivalent, implying they have at least one common factor.

- Alpha relies not only on the degree of correlation among the items, but also on the number of items in your scale. If you keep increasing the number of items on your scale, alpha rises and the questions can appear more consistent, even though the average correlation among them remains unchanged.

- If two different scales each measure a specific aspect and you combine them to create a single, longer scale, your CA measurement will again be skewed. Alpha is likely to be high for the combined scale, even though that scale is looking at two different attributes.

- An extremely high alpha value is not always a positive thing. Alpha values that are too high may suggest significant levels of item redundancy, or items asking the same question in subtly different ways.
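Two of the caveats above, the effect of scale length and the effect of combining unrelated scales, can be checked numerically. The sketch below is a hedged illustration: it assumes NumPy, works from item correlation matrices rather than raw responses (so it computes standardized alpha), and uses hypothetical correlation values. The helper names `alpha_from_corr` and `uniform_corr` are our own.

```python
import numpy as np

def alpha_from_corr(corr):
    """Standardized alpha computed from an item correlation matrix."""
    corr = np.asarray(corr, dtype=float)
    k = corr.shape[0]
    return k / (k - 1) * (1 - np.trace(corr) / corr.sum())

def uniform_corr(k, r):
    """k x k correlation matrix whose off-diagonal entries all equal r."""
    return np.full((k, k), r) + (1 - r) * np.eye(k)

# Caveat: adding items raises alpha even when the average correlation stays at 0.3.
for k in (5, 10, 20):
    print(f"{k} items, r = 0.3 -> alpha {alpha_from_corr(uniform_corr(k, 0.3)):.2f}")

# Caveat: two 5-item scales that are each internally consistent (r = 0.6)
# but completely uncorrelated with each other, merged into one 10-item scale.
block = uniform_corr(5, 0.6)
zeros = np.zeros((5, 5))
combined = np.block([[block, zeros], [zeros, block]])
print(f"combined scale -> alpha {alpha_from_corr(combined):.2f}")
```

Under these assumptions alpha climbs from 0.68 to 0.81 to 0.90 as the scale grows from 5 to 20 items, and the merged scale still scores about 0.78 despite measuring two unrelated attributes.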

Despite the potential issues, CA remains a useful statistic in the survey validation process, especially if you enlist help from solid resources and survey professionals while employing it. Whether you’re collecting insurance survey data or measuring customer loyalty, CA can help ensure your questions are internally consistent, your survey valid and your results on the mark when it comes time to analyze survey data.

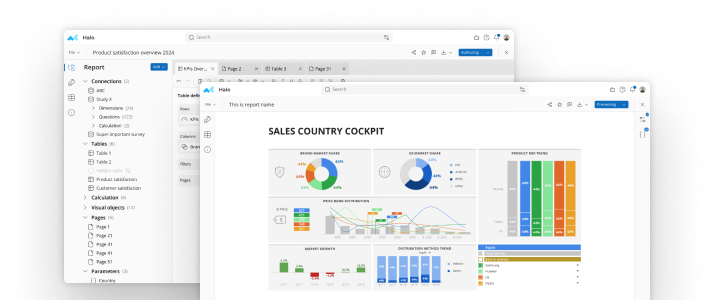

See mTab Halo in Action

Make smarter decisions faster with the world's #1 Insight Management System.